Welcome to 2017. I’ve been feeling old lately. Part of the sensation comes from the fact that the world I am presently inhabiting, and the world that I can see emerging, are fundamentally different from the one that I was born into, and in which my basic social sensibilities developed. Nowhere is this more apparent than in the domain of privacy. I have no doubt that my childhood – the 1970s – will be looked back upon as the golden age of anonymity, and thus, in a sense, of individual freedom. I was watching a ’70s movie the other day, in which a couple of detectives chase a criminal by car, heading for the state line. The criminal eludes them, and so they head back to town. On the way back, they stop at a pay phone, where one of the detectives calls headquarters to give them an update. I had to explain to my kids that, in the old days, once the police were out of range for radio contact, the only way they could communicate with the station was by finding a telephone.

I can still recall the feeling of being in a city, but being completely incommunicado – no one knew where you were, no one could get in touch with you – and having this be normal. It is, of course, possible to recreate these conditions, but doing so now is inherently suspicious. As soon as you reappeared, you would have a dozen messages from people saying “where have you been?”

This is all a well-known consequence of cell phones. There are, however, a number of far more intrusive technologies on the horizon that will completely eclipse the sort of stuff that we have been worrying about so far. Perhaps the most extraordinary is the eye-tracking VR headset developed by Fove. I hadn’t really been paying attention to this until a friend of mine took a job there and explained to me the significance of the technology.

First, let me say that there is a perfectly reasonable technical reason for Fove to want to develop a headset that does what theirs does. As anyone who knows a bit about human vision can tell you, the impression we have that we perceive an image of the world – like on a movie or TV screen – is largely an illusion. In fact, what we actually see, or at least see clearly, is a very small focal area in our visual field, with the rest roughed in by our brains. It is because our eyes are capable of very rapid movement to any point within the visual field, and because they tend to flit about (in rapid movements known as saccades, which we make at a rate of around three per second), that we get the impression of looking at an image that is entirely in focus.

Now consider VR technology. (By the way, for anyone who has never experienced high-definition VR, I highly recommend it. Within a few minutes, you will understand why this technology is a very, very big deal.) Computationally, VR is extremely demanding, because it has to generate a very large image, and it has to update it very quickly, so that the user will not notice any lag between head movements and changes in the image. (Consider what happens when the user jerks his or her head 90 degrees to the left – a huge update to the image has to occur very, very quickly. Also, because it’s your head that is doing the moving, and your visual cortex is in your head, the update has to occur much faster than when you move your hand on a controller.)

So the amount of graphics processing involved in VR is gigantic. At the same time, most of this processing is wasted. Why? Because standard VR creates a virtual world for us to look at – that is, it generates a complete high-resolution image, all fully textured and rendered, all in focus. But then the user only looks at a tiny little piece of it! So in principle you don’t really need the entire image to be in focus, or fully textured, or to have all the shading done properly, etc. You only need to make sure that the part the person is looking at is rendered properly in high resolution; the rest can be roughed in.
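To put rough numbers on how much work this saves, here is a minimal Python sketch of the arithmetic, under loudly simplifying assumptions: the view is treated as a circle centred on the gaze point, the number of pixels shaded scales with the square of linear resolution, and the ring sizes and resolution factors are made-up illustrative values, not Fove’s actual parameters. The names `Ring` and `relative_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ring:
    outer_deg: float  # outer edge of the ring, in degrees from the gaze point
    scale: float      # linear resolution relative to full (1.0 = full res)


def relative_cost(rings: list[Ring]) -> float:
    """Shading work relative to rendering the whole view at full resolution.

    Assumes a circular view centred on the gaze point, rings ordered from
    the inside out, and area proportional to radius squared (a flat
    approximation of the visual field).
    """
    total_area = rings[-1].outer_deg ** 2
    cost, inner = 0.0, 0.0
    for ring in rings:
        share = (ring.outer_deg ** 2 - inner ** 2) / total_area
        cost += share * ring.scale ** 2  # pixel count goes with scale squared
        inner = ring.outer_deg
    return cost


# Hypothetical layout: full resolution within 5 degrees of the gaze point,
# half resolution out to 20 degrees, quarter resolution out to 55 degrees
# (roughly a 110-degree field of view).
rings = [Ring(5, 1.0), Ring(20, 0.5), Ring(55, 0.25)]
print(f"{relative_cost(rings):.1%}")  # prints 9.3%
```

With these invented numbers, only about 9% of the full-resolution shading work remains. Real headsets also have to deal with rectangular displays, lens distortion and eye-tracking latency, so actual savings will differ.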

This is basically what the Fove system does. The goggles track your gaze, and render only the part that you are looking at in high resolution. This video demonstrates the effect pretty well:

I’ve never had a pair on, but having tried an HTC Vive, and seeing how the technology works, I have no doubt that they are able to create virtual environments that are practically indistinguishable from reality. If not, they will be soon.

Technologically, this is all fine and everything, but from a philosophical perspective, what the Fove goggles are actually doing is a form of mind-reading. In the same way that the “image” that we appear to have before us is largely an illusion, so is the idea that we create a detailed representation of the world around us. Not only are your eyes, in a sense, part of your brain, but your eye movements are actually a functional part of many thought processes. Here is Andy Clark:

> The visual brain is opportunistic, always ready to make do and mend, to get the most from what the world already presents rather than building whole inner cognitive routines from neural cloth. Instead of attempting to create, maintain, and update a rich inner representation (inner image or model) of the scene, it deploys a strategy that roboticist Rodney Brooks describes as “letting the world serve as its own best model.” Brooks’s idea is that instead of tackling the alarmingly difficult problem of using input from a robot’s sensors to build up a highly detailed, complex inner model of its local surroundings, a good robot should use sensing frugally in order to select and monitor just a few critical aspects of a situation, relying largely upon the persistent physical surroundings themselves to act as a kind of enduring, external data-store: an external “memory” available for sampling as needs dictate. Our brains, like those of the mobile robots, try whenever possible to let the world serve as its own best model.

(This quote is from a longer discussion of “neural opportunism” in Clark’s book, *Natural-Born Cyborgs*, which I highly recommend reading.)

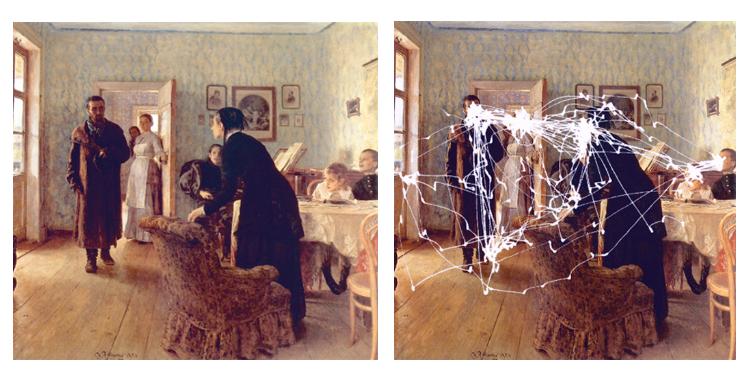

Instead of making a “copy” of the world in memory, then using that representation as a basis for thinking, we use the world itself as a point of reference, storing in memory only the information required to locate what we need in the world. This is revealed in the pattern of eye saccades across an image, when a person is asked to look for specific information, or to solve a particular problem. Information is drawn from the world only when it is required for a particular cognitive operation. Thus one can, in many circumstances, literally tell what a person is thinking by tracking his or her eye movements. (I am inclined to agree with extended mind theorists, like Clark, who say that the eye movements are part of the thought process.)

I trust it is sufficiently obvious how much of a challenge this technology presents to traditional notions of privacy. It is ridiculously intrusive. It gives technology firms better access to certain of your thoughts than you yourself (i.e. your own conscious mind) have. To get a sense of this, consider the following image, and superimposed on the right, a typical pattern of saccades of a person looking at the image:

Now suppose that the image is an advertisement, or a webpage with multiple advertisements. Do you want Facebook to have information of the sort revealed on the right, by your pattern of eye movements across the image?
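To make the worry concrete, here is a minimal Python sketch of one standard eye-tracking measure, dwell time per “area of interest”. It assumes the headset has already grouped raw gaze samples into fixations (points where the eye rests, each with a duration); the screen coordinates, region names and durations below are hypothetical, and real analyses use more careful fixation detection than this sketch implies.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # screen coordinates in pixels
    y: float
    duration_ms: float  # how long the eye rested at this point


@dataclass
class Region:
    name: str           # hypothetical label for an area of interest, e.g. an ad
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, f: Fixation) -> bool:
        return self.left <= f.x < self.right and self.top <= f.y < self.bottom


def dwell_times(fixations: list[Fixation], regions: list[Region]) -> dict[str, float]:
    """Total fixation time (ms) falling inside each region."""
    totals = {r.name: 0.0 for r in regions}
    for f in fixations:
        for r in regions:
            if r.contains(f):
                totals[r.name] += f.duration_ms
                break  # regions assumed not to overlap; first match wins
    return totals


regions = [Region("ad_left", 0, 0, 300, 600), Region("ad_right", 900, 0, 1200, 600)]
fixations = [
    Fixation(100, 200, 250),
    Fixation(950, 100, 400),
    Fixation(150, 500, 180),
    Fixation(600, 300, 220),  # lands on neither ad, so it is not counted
]
print(dwell_times(fixations, regions))  # {'ad_left': 430.0, 'ad_right': 400.0}
```

The point is how little machinery is needed: a page full of ads yields a per-ad attention profile like this for every viewer, without anyone clicking on anything.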

To take an obvious example, it wouldn’t require much ingenuity to figure out a great deal about a person’s sexual preferences by studying their eye movements. Recent research has shown that measuring pupillary response can be used to distinguish heterosexual from homosexual men, as well as to detect pedophilic responses (see Janice Attard-Johnson, Markus Bindemann and Caoilte Ó Ciardha, “Heterosexual, Homosexual, and Bisexual Men’s Pupillary Responses to Persons at Different Stages of Sexual Development”). This is slightly different from tracking eye movements, but technologically no more challenging.

Recall the old “my TiVo thinks I’m gay” story. In this case, your entertainment system might actually know that you are gay, even before you figure it out yourself. How hard would it be to figure out what a person finds most arousing, then modify the content of an advertisement to cater to that sensibility? More worrisome is the ability to detect pedophilic preferences. Even though this detects only preferences, not behaviour, it starts to move us into *Minority Report* territory (assuming that present attitudes toward pedophilia remain unchanged).

One last example, before we are done. My colleague here at UofT, Kang Lee, has been working on some technology that permits detection and analysis of capillary blood flow patterns in people’s faces, using an ordinary video camera. His particular interest is in lie detection, but it can also be used to detect a wide range of emotions:

I suggested to him that he get someone to hack an Xbox Kinect and create a version that works on that system (since the Kinect camera already does the facial colour detection that his software relies upon). Watching the video, however, you realize that the technology could be integrated into Skype, so that as you are talking to a person, your computer could be doing real-time analysis of their emotions, stress or anxiety levels, with perhaps a dashboard display showing the results.

What all of these technologies have in common is that they challenge the privacy of the mental — something that most of us grew up taking for granted, but that seems unlikely to continue.

«the technology could be integrated into Skype, so that as you are talking to a person, your computer could be doing real-time analysis of their emotions, stress or anxiety levels, with perhaps a dashboard display showing the results.» A dashboard display showing the results? You’re so old school… The clothes we’ll be wearing (see David Eagleman’s sensory vest) will just ‘tell’ us what the other person is feeling (and simultaneously tell us how the stock market is doing).

Really fascinating. I’m sure the technology will also be used in positive ways, though. For example, pupils dilate when System 2 exerts mental effort, so we could design experiences that are more balanced and promote critical engagement.

I struggle to worry that the pupil-dilation finding could ever amount to much in terms of usable tech. If I’m reading their results right, it’s something like a 5–8% change in pupil size under lab conditions, which we can imagine to be fairly tightly controlled. In the real world, pupil size is constantly fluctuating for all sorts of reasons, and I very much doubt that any system, no matter how technologically advanced, would be able to pull much signal out of what would probably be an incredibly large amount of noise. Given how poor online ad targeting remains, even with the incredible amount of data available to firms like Google, we’re some distance away yet. Furthermore, I imagine that any false positives could lead to pretty serious lawsuits, depending on the ultimate consequences.